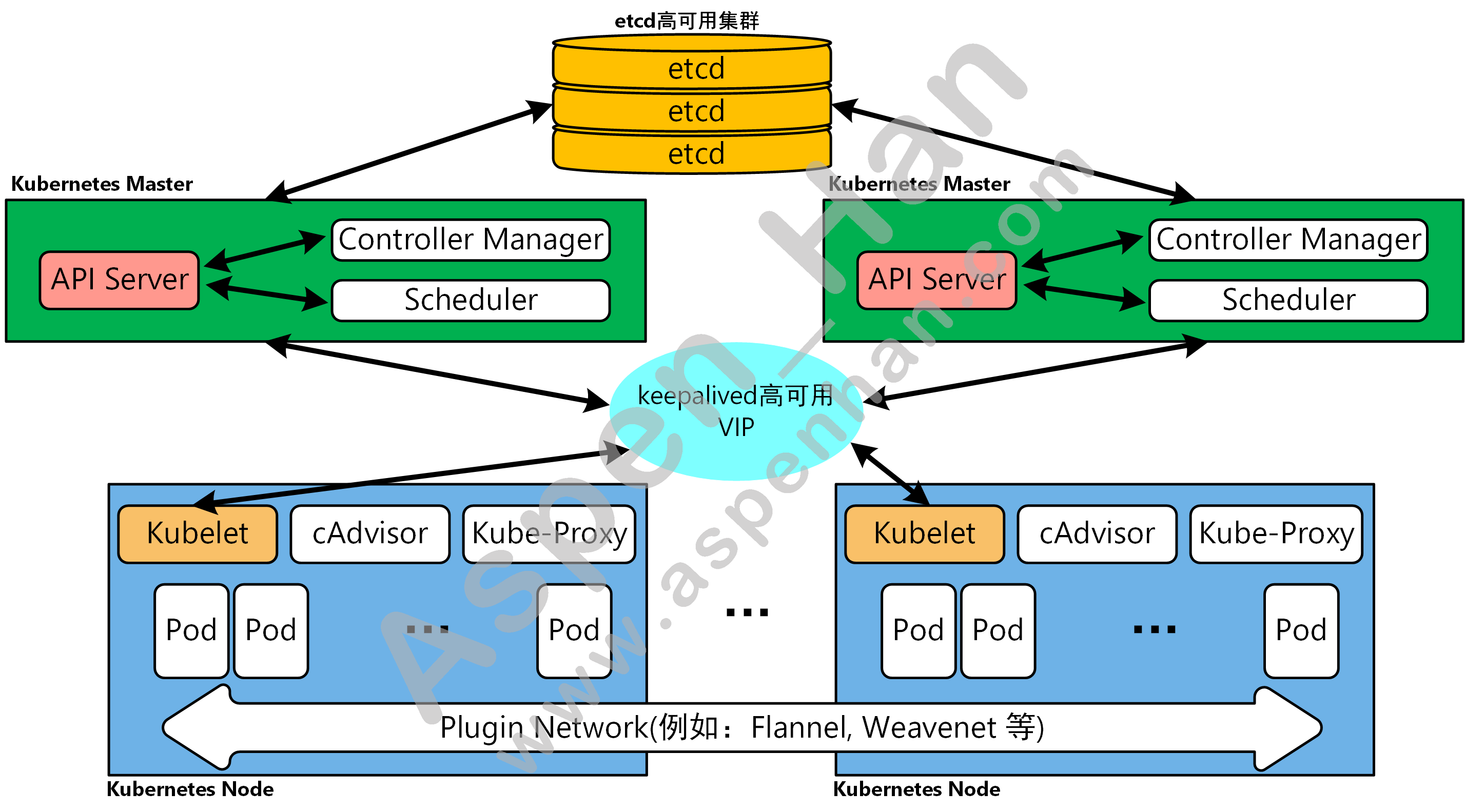

I. How It Works

II. Building the etcd Cluster

1. Clean Up the Environment

|

systemctl stop etcd.service # stop the etcd service

rm -fr /var/lib/etcd/* # clear the initial data directory |

[root@k8s-master ~]# rm -rf /var/lib/etcd/*

[root@k8s-master ~]# ll /var/lib/etcd/

total 0

2. Deploy the Services

step 1 Install the etcd service

| yum install -y etcd |

[root@k8s-node01 ~]# yum install -y etcd.x86_64

......

Installed:

etcd.x86_64 0:3.3.11-2.el7.centos

Complete!

[root@k8s-registry ~]# yum install -y etcd.x86_64

......

Installed:

etcd.x86_64 0:3.3.11-2.el7.centos

Complete!

step 2 Configure the etcd cluster

|

# /etc/etcd/etcd.conf

#[Members]

ETCD_DATA_DIR="/var/lib/etcd/<directory>" # initial data directory

ETCD_LISTEN_PEER_URLS="http://0.0.0.0:2380" # listen address for peer (node-to-node) traffic

ETCD_LISTEN_CLIENT_URLS="http://0.0.0.0:2379" # listen address for client traffic

ETCD_NAME="<node name>" # name of this node

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="http://IP:2380"

ETCD_ADVERTISE_CLIENT_URLS="http://IP:2379"

ETCD_INITIAL_CLUSTER="node1=http://IP:<port>,node2=http://IP:<port>,...,node[n]=http://IP:<port>" # default port: 2380

ETCD_INITIAL_CLUSTER_TOKEN="<token>"

ETCD_INITIAL_CLUSTER_STATE="new" |
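
ETCD_INITIAL_CLUSTER should list the same members on every node, so it can help to generate the string once instead of typing it on each host. A minimal sketch, assuming the default peer port 2380; `build_initial_cluster` is a hypothetical helper name, not part of etcd:

```shell
# build_initial_cluster (hypothetical helper): turn "name=ip" pairs into
# the comma-separated value expected by ETCD_INITIAL_CLUSTER.
# Assumes every member uses peer port 2380.
build_initial_cluster() (
    port=2380
    out=""
    for pair in "$@"; do
        name=${pair%%=*}   # text before the first "="
        ip=${pair#*=}      # text after the first "="
        out="${out}${out:+,}${name}=http://${ip}:${port}"
    done
    printf '%s\n' "$out"
)

build_initial_cluster etcd_node1=10.0.0.110 etcd_node2=10.0.0.120 etcd_node3=10.0.0.140
```

Only ETCD_NAME and the two advertise URLs then differ from node to node.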

[root@k8s-master ~]# grep -Ev '^$|#' /etc/etcd/etcd.conf

ETCD_DATA_DIR="/var/lib/etcd/"

ETCD_LISTEN_PEER_URLS="http://0.0.0.0:2380"

ETCD_LISTEN_CLIENT_URLS="http://0.0.0.0:2379"

ETCD_NAME="etcd_node1"

ETCD_INITIAL_ADVERTISE_PEER_URLS="http://10.0.0.110:2380"

ETCD_ADVERTISE_CLIENT_URLS="http://10.0.0.110:2379"

ETCD_INITIAL_CLUSTER="etcd_node1=http://10.0.0.110:2380,etcd_node2=http://10.0.0.120:2380,etcd_node3=http://10.0.0.140:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_INITIAL_CLUSTER_STATE="new"

[root@k8s-master ~]# scp -rp /etc/etcd/etcd.conf 10.0.0.120:/etc/etcd/etcd.conf

root@10.0.0.120's password:

etcd.conf 100% 1742 2.4MB/s 00:00

[root@k8s-master ~]# scp -rp /etc/etcd/etcd.conf 10.0.0.140:/etc/etcd/etcd.conf

root@10.0.0.140's password:

etcd.conf

[root@k8s-node01 ~]# grep -Ev '^$|#' /etc/etcd/etcd.conf

ETCD_DATA_DIR="/var/lib/etcd/"

ETCD_LISTEN_PEER_URLS="http://0.0.0.0:2380"

ETCD_LISTEN_CLIENT_URLS="http://0.0.0.0:2379"

ETCD_NAME="etcd_node2"

ETCD_INITIAL_ADVERTISE_PEER_URLS="http://10.0.0.120:2380"

ETCD_ADVERTISE_CLIENT_URLS="http://10.0.0.120:2379"

ETCD_INITIAL_CLUSTER="etcd_node1=http://10.0.0.110:2380,etcd_node2=http://10.0.0.120:2380,etcd_node3=http://10.0.0.140:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_INITIAL_CLUSTER_STATE="new"

[root@k8s-registry ~]# grep -Ev '^$|#' /etc/etcd/etcd.conf

ETCD_DATA_DIR="/var/lib/etcd/"

ETCD_LISTEN_PEER_URLS="http://0.0.0.0:2380"

ETCD_LISTEN_CLIENT_URLS="http://0.0.0.0:2379"

ETCD_NAME="etcd_node3"

ETCD_INITIAL_ADVERTISE_PEER_URLS="http://10.0.0.140:2380"

ETCD_ADVERTISE_CLIENT_URLS="http://10.0.0.140:2379"

ETCD_INITIAL_CLUSTER="etcd_node1=http://10.0.0.110:2380,etcd_node2=http://10.0.0.120:2380,etcd_node3=http://10.0.0.140:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_INITIAL_CLUSTER_STATE="new"

step 3 Start the etcd service

| systemctl restart etcd |

[root@k8s-master ~]# systemctl restart etcd.service

[root@k8s-master ~]# systemctl status etcd.service

● etcd.service - Etcd Server

Loaded: loaded (/usr/lib/systemd/system/etcd.service; enabled; vendor preset: disabled)

Active: active (running) since Fri 2021-07-23 14:19:21 CST; 22s ago

Main PID: 8007 (etcd)

CGroup: /system.slice/etcd.service

└─8007 /usr/bin/etcd --name=etcd_node1 --data-dir=/var/lib/etcd/ --listen-client-urls=http:..

......

[root@k8s-node01 ~]# systemctl restart etcd.service

[root@k8s-node01 ~]# systemctl status etcd.service

● etcd.service - Etcd Server

Loaded: loaded (/usr/lib/systemd/system/etcd.service; disabled; vendor preset: disabled)

Active: active (running) since Fri 2021-07-23 14:19:27 CST; 39s ago

Main PID: 27613 (etcd)

Tasks: 8

Memory: 22.4M

CGroup: /system.slice/etcd.service

└─27613 /usr/bin/etcd --name=etcd_node2 --data-dir=/var/lib/etcd/ --listen-client-urls=http..

......

[root@k8s-registry ~]# systemctl restart etcd.service

[root@k8s-registry ~]# systemctl status etcd.service

● etcd.service - Etcd Server

Loaded: loaded (/usr/lib/systemd/system/etcd.service; disabled; vendor preset: disabled)

Active: active (running) since Fri 2021-07-23 14:19:21 CST; 1min 18s ago

Main PID: 47095 (etcd)

Tasks: 8

Memory: 23.2M

CGroup: /system.slice/etcd.service

└─47095 /usr/bin/etcd --name=etcd_node3 --data-dir=/var/lib/etcd/ --listen-client-urls=http...

......

step 4 Check the etcd service

| etcdctl cluster-health |

[root@k8s-master ~]# etcdctl -C http://10.0.0.110:2379 cluster-health

member 2a864251cde86ff0 is healthy: got healthy result from http://10.0.0.110:2379

member c1583a2151ea4303 is healthy: got healthy result from http://10.0.0.140:2379

member fc5b819f06c6532e is healthy: got healthy result from http://10.0.0.120:2379

cluster is healthy

[root@k8s-node01 ~]# etcdctl -C http://10.0.0.120:2379 cluster-health

member 2a864251cde86ff0 is healthy: got healthy result from http://10.0.0.110:2379

member c1583a2151ea4303 is healthy: got healthy result from http://10.0.0.140:2379

member fc5b819f06c6532e is healthy: got healthy result from http://10.0.0.120:2379

cluster is healthy

[root@k8s-registry ~]# etcdctl cluster-health

member 2a864251cde86ff0 is healthy: got healthy result from http://10.0.0.110:2379

member c1583a2151ea4303 is healthy: got healthy result from http://10.0.0.140:2379

member fc5b819f06c6532e is healthy: got healthy result from http://10.0.0.120:2379

cluster is healthy

step 5 Adjust the flanneld configuration file

|

# /etc/sysconfig/flanneld

FLANNEL_ETCD_ENDPOINTS="http://IP:<port>,http://IP:<port>,http://IP:<port>, ... " # default port: 2379

FLANNEL_ETCD_PREFIX="<name>" |

[root@k8s-master ~]# grep -Ev '^$|#' /etc/sysconfig/flanneld

FLANNEL_ETCD_ENDPOINTS="http://10.0.0.110:2379,http://10.0.0.120:2379,http://10.0.0.130:2379"

FLANNEL_ETCD_PREFIX="/aspenhan.com/network"

[root@k8s-master ~]# scp -rp /etc/sysconfig/flanneld 10.0.0.120:/etc/sysconfig/flanneld

root@10.0.0.120's password:

flanneld 100% 410 444.2KB/s 00:00

lost connection

root@10.0.0.130's password:

flanneld

[root@k8s-node01 ~]# grep -Ev '^$|#' /etc/sysconfig/flanneld

FLANNEL_ETCD_ENDPOINTS="http://10.0.0.110:2379,http://10.0.0.120:2379,http://10.0.0.130:2379"

FLANNEL_ETCD_PREFIX="/aspenhan.com/network"

[root@k8s-node02 ~]# grep -Ev '^$|#' /etc/sysconfig/flanneld

FLANNEL_ETCD_ENDPOINTS="http://10.0.0.110:2379,http://10.0.0.120:2379,http://10.0.0.130:2379"

FLANNEL_ETCD_PREFIX="/aspenhan.com/network"

step 6 Set the network address range

|

# on the master node

etcdctl mk /<prefix>/config '{ "Network": "<IP address>/<mask>" }' |
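
The key written here has to be FLANNEL_ETCD_PREFIX with `/config` appended, which is easy to mistype. A small dry-run sketch that only prints the command for review and does not touch etcd; `flannel_mk_command` is a hypothetical helper name:

```shell
# flannel_mk_command (hypothetical helper): print the etcdctl command that
# stores the flannel network under <prefix>/config. Nothing is executed.
flannel_mk_command() (
    prefix=${1%/}   # drop one trailing slash, if present
    cidr=$2
    printf "etcdctl mk %s/config '{\"Network\":\"%s\"}'\n" "$prefix" "$cidr"
)

flannel_mk_command /aspenhan.com/network 192.168.0.0/16
```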

[root@k8s-master ~]# etcdctl mk /aspenhan.com/network/config '{"Network":"192.168.0.0/16"}'

{"Network":"192.168.0.0/16"}

step 7 Start the flannel and docker services

|

systemctl restart flanneld

systemctl restart docker |

[root@k8s-master ~]# systemctl restart flanneld.service

[root@k8s-master ~]# systemctl restart docker

[root@k8s-node01 ~]# systemctl restart flanneld.service

[root@k8s-node01 ~]# systemctl restart docker

[root@k8s-node02 ~]# systemctl restart flanneld.service

[root@k8s-node02 ~]# systemctl restart docker

III. Master Node Deployment

1. Clean Up the Environment

| yum remove -y kubernetes-node |

[root@k8s-registry ~]# yum remove kubernete-node

Loaded plugins: fastestmirror, product-id, search-disabled-repos, subscription-manager

This system is not registered with an entitlement server. You can use subscription-manager to register.

No Match for argument: kubernete-node

No Packages marked for removal

2. Deploy the Services

step 1 Install the kubernetes service

| yum install -y kubernetes-master |

[root@k8s-master ~]# yum install -y kubernetes-master

Loaded plugins: fastestmirror, product-id, search-disabled-repos, subscription-manager

......

Package kubernetes-master-1.5.2-0.7.git269f928.el7.x86_64 already installed and latest version

Nothing to do

[root@k8s-registry ~]# yum install -y kubernetes-master.x86_64

Loaded plugins: fastestmirror, product-id, search-disabled-repos, subscription-manager

......

Installed:

kubernetes-master.x86_64 0:1.5.2-0.7.git269f928.el7

Dependency Installed:

kubernetes-client.x86_64 0:1.5.2-0.7.git269f928.el7

Complete!

step 2 Configure the apiserver

|

# /etc/kubernetes/apiserver

KUBE_API_ADDRESS="--insecure-bind-address=0.0.0.0" # API Server listen address

KUBE_API_PORT="--port=<port>" # API Server listen port, default 8080

KUBELET_PORT="--kubelet-port=<port>" # kubelet management port, default 10250

KUBE_ETCD_SERVERS="--etcd-servers=http://<IP address>:<port>,http://<IP address>:<port>,http://<IP address>:<port>, ..." # etcd client addresses and ports (default port 2379)

KUBE_SERVICE_ADDRESSES="--service-cluster-ip-range=<IP address>/<mask>" # address range for the load-balancing (Service) virtual IPs

KUBE_ADMISSION_CONTROL="--admission-control=NamespaceLifecycle,NamespaceExists,LimitRanger,SecurityContextDeny,ResourceQuota" |
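
The Service range given to `--service-cluster-ip-range` is generally expected not to overlap the flannel network stored in etcd earlier (192.168.0.0/16 in this setup). A rough sanity check in plain shell arithmetic, offered as a sketch; the function names `ip_to_int`, `cidr_range` and `cidrs_overlap` are hypothetical helpers, and only IPv4 is handled:

```shell
# ip_to_int: dotted-quad IPv4 address -> integer
ip_to_int() (
    IFS=.
    set -- $1    # split the address on "." into $1..$4
    echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
)

# cidr_range: print "first last" addresses of a CIDR block as integers
cidr_range() (
    addr=${1%/*}
    bits=${1#*/}
    ip=$(ip_to_int "$addr")
    size=$(( 1 << (32 - bits) ))
    first=$(( ip / size * size ))   # round down to the block boundary
    echo "$first $(( first + size - 1 ))"
)

# cidrs_overlap: exit status 0 if the two CIDR blocks share any address
cidrs_overlap() (
    set -- $(cidr_range "$1") $(cidr_range "$2")
    # $1..$2 = first/last of block A, $3..$4 = first/last of block B
    [ "$1" -le "$4" ] && [ "$3" -le "$2" ]
)

if cidrs_overlap 192.168.0.0/16 10.100.0.0/16; then
    echo "ranges overlap"
else
    echo "ranges do not overlap"
fi
```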

[root@k8s-master ~]# grep -Ev '^$|#' /etc/kubernetes/apiserver

KUBE_API_ADDRESS="--insecure-bind-address=0.0.0.0"

KUBE_API_PORT="--port=10000"

KUBELET_PORT="--kubelet-port=10250"

KUBE_ETCD_SERVERS="--etcd-servers=http://10.0.0.110:2379,http://10.0.0.120:2379,http://10.0.0.140:2379"

KUBE_SERVICE_ADDRESSES="--service-cluster-ip-range=10.100.0.0/16"

KUBE_ADMISSION_CONTROL="--admission-control=NamespaceLifecycle,NamespaceExists,LimitRanger,SecurityContextDeny,ResourceQuota"

KUBE_API_ARGS="--service-node-port-range=3000-50000"

[root@k8s-master ~]# scp -rp /etc/kubernetes/apiserver 10.0.0.140://etc/kubernetes/apiserver

root@10.0.0.140's password:

apiserver

[root@k8s-registry ~]# grep -Ev '^$|#' /etc/kubernetes/apiserver

KUBE_API_ADDRESS="--insecure-bind-address=0.0.0.0"

KUBE_API_PORT="--port=10000"

KUBELET_PORT="--kubelet-port=10250"

KUBE_ETCD_SERVERS="--etcd-servers=http://10.0.0.110:2379,http://10.0.0.120:2379,http://10.0.0.140:2379"

KUBE_SERVICE_ADDRESSES="--service-cluster-ip-range=10.100.0.0/16"

KUBE_ADMISSION_CONTROL="--admission-control=NamespaceLifecycle,NamespaceExists,LimitRanger,SecurityContextDeny,ResourceQuota"

KUBE_API_ARGS="--service-node-port-range=3000-50000"

step 3 Configure the controller manager and scheduler

|

# /etc/kubernetes/config

KUBE_MASTER="--master=http://127.0.0.1:<port>" # API Server address and port (default port 8080) |

[root@k8s-master ~]# grep -Ev '^$|#' /etc/kubernetes/config

KUBE_LOGTOSTDERR="--logtostderr=true"

KUBE_LOG_LEVEL="--v=0"

KUBE_ALLOW_PRIV="--allow-privileged=false"

KUBE_MASTER="--master=http://127.0.0.1:10000"

[root@k8s-master ~]# scp -rp /etc/kubernetes/config 10.0.0.140://etc/kubernetes/config

root@10.0.0.140's password:

config 100% 656 830.7KB/s 00:00

[root@k8s-registry ~]# grep -Ev '^$|#' /etc/kubernetes/config

KUBE_LOGTOSTDERR="--logtostderr=true"

KUBE_LOG_LEVEL="--v=0"

KUBE_ALLOW_PRIV="--allow-privileged=false"

KUBE_MASTER="--master=http://127.0.0.1:10000"

step 4 Start the kubernetes management services

|

systemctl restart kube-apiserver

systemctl restart kube-controller-manager

systemctl restart kube-scheduler |

[root@k8s-master ~]# systemctl restart kube-apiserver.service

[root@k8s-master ~]# systemctl restart kube-controller-manager.service

[root@k8s-master ~]# systemctl restart kube-scheduler.service

[root@k8s-master ~]# kubectl -s http://127.0.0.1:10000 get node

NAME STATUS AGE

10.0.0.120 Ready 28m

10.0.0.130 Ready 27m

[root@k8s-registry ~]# systemctl start kube-apiserver.service

[root@k8s-registry ~]# systemctl start kube-controller-manager.service

[root@k8s-registry ~]# systemctl start kube-scheduler.service

[root@k8s-registry ~]# systemctl enable kube-scheduler.service

Created symlink from /etc/systemd/system/multi-user.target.wants/kube-scheduler.service to /usr/lib/systemd/system/kube-scheduler.service.

[root@k8s-registry ~]# systemctl enable kube-apiserver.service

Created symlink from /etc/systemd/system/multi-user.target.wants/kube-apiserver.service to /usr/lib/systemd/system/kube-apiserver.service.

[root@k8s-registry ~]# systemctl enable kube-controller-manager.service

Created symlink from /etc/systemd/system/multi-user.target.wants/kube-controller-manager.service to /usr/lib/systemd/system/kube-controller-manager.service.

[root@k8s-registry ~]# kubectl -s http://127.0.0.1:10000 get node

NAME STATUS AGE

10.0.0.120 Ready 27m

10.0.0.130 Ready 27m

step 5 Test

[root@k8s-master ~]# cd k8s_yaml/Deployment/

[root@k8s-master ~/k8s_yaml/Deployment]# kubectl -s http://127.0.0.1:10000 create -f k8s_deploy_nginx.yml

deployment "nginx" created

[root@k8s-master ~/k8s_yaml/Deployment]# kubectl -s http://127.0.0.1:10000 get all

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

deploy/nginx 4 4 4 0 6s

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE

svc/kubernetes 10.100.0.1 <none> 443/TCP 41m

NAME DESIRED CURRENT READY AGE

rs/nginx-3790091926 4 4 4 6s

NAME READY STATUS RESTARTS AGE

po/nginx-3790091926-8243n 1/1 Running 0 6s

po/nginx-3790091926-8zkvc 1/1 Running 0 6s

po/nginx-3790091926-c2rn6 1/1 Running 0 6s

po/nginx-3790091926-k2s96 1/1 Running 0 6s

[root@k8s-registry ~]# kubectl -s http://127.0.0.1:10000 get all

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

deploy/nginx 4 4 4 0 10s

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE

svc/kubernetes 10.100.0.1 <none> 443/TCP 41m

NAME DESIRED CURRENT READY AGE

rs/nginx-3790091926 4 4 4 10s

NAME READY STATUS RESTARTS AGE

po/nginx-3790091926-8243n 1/1 Running 0 10s

po/nginx-3790091926-8zkvc 1/1 Running 0 10s

po/nginx-3790091926-c2rn6 1/1 Running 0 10s

po/nginx-3790091926-k2s96 1/1 Running 0 10s

IV. keepalived High Availability

step 1 Install the keepalived service

| yum install -y keepalived |

[root@k8s-master ~/k8s_yaml/Deployment]# yum install -y keepalived.x86_64

......

Installed:

keepalived.x86_64 0:1.3.5-19.el7

Dependency Installed:

net-snmp-agent-libs.x86_64 1:5.7.2-49.el7_9.1 net-snmp-libs.x86_64 1:5.7.2-49.el7_9.1

Dependency Updated:

ipset.x86_64 0:7.1-1.el7 ipset-libs.x86_64 0:7.1-1.el7

Complete!

[root@k8s-registry ~]# yum install keepalived.x86_64 -y

......

Installed:

keepalived.x86_64 0:1.3.5-19.el7

Dependency Installed:

net-snmp-agent-libs.x86_64 1:5.7.2-49.el7_9.1 net-snmp-libs.x86_64 1:5.7.2-49.el7_9.1

Dependency Updated:

ipset.x86_64 0:7.1-1.el7 ipset-libs.x86_64 0:7.1-1.el7

Complete!

step 2 Configure keepalived

|

# /etc/keepalived/keepalived.conf

global_defs {

router_id <name>

}

vrrp_instance <name> {

state <role> # MASTER or BACKUP

interface <interface>

virtual_router_id <numeric ID>

priority <priority>

authentication {

auth_type PASS

auth_pass <password>

}

virtual_ipaddress {

<IP address>

}

} |
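
Between the two masters only `router_id` and `priority` need to differ; with both instances in state BACKUP, the node with the higher priority ends up holding the virtual IP. A sketch that renders the file from parameters, assuming the instance name VI_1, `advert_int 1` and the password 1111 used in this setup; `render_keepalived` is a hypothetical helper name and only prints to stdout:

```shell
# render_keepalived (hypothetical helper): print a keepalived.conf for one node.
# Arguments: router_id, interface, virtual_router_id, priority, virtual IP
render_keepalived() (
    router_id=$1 iface=$2 vrid=$3 priority=$4 vip=$5
    cat <<EOF
global_defs {
    router_id ${router_id}
}
vrrp_instance VI_1 {
    state BACKUP
    interface ${iface}
    virtual_router_id ${vrid}
    priority ${priority}
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        ${vip}
    }
}
EOF
)

# Preview the configuration for the higher-priority master.
render_keepalived LVS_DEVEL_110 eth0 51 120 10.0.0.100
```

Redirecting the output to /etc/keepalived/keepalived.conf on each host is left as a manual step so the result can be reviewed first.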

[root@k8s-master ~/k8s_yaml/Deployment]# grep -Ev '^$' /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

router_id LVS_DEVEL_110

}

vrrp_instance VI_1 {

state BACKUP

interface eth0

virtual_router_id 51

priority 120

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

10.0.0.100

}

}

[root@k8s-master ~/k8s_yaml/Deployment]# cd /etc/keepalived/

[root@k8s-master /etc/keepalived]# scp -rp keepalived.conf 10.0.0.140:`pwd`

root@10.0.0.140's password:

keepalived.conf

[root@k8s-registry ~]# cat /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

router_id LVS_DEVEL_120

}

vrrp_instance VI_1 {

state BACKUP

interface eth0

virtual_router_id 51

priority 80

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

10.0.0.100

}

}

step 3 Start the keepalived service

| systemctl restart keepalived |

[root@k8s-master /etc/keepalived]# systemctl enable keepalived.service

Created symlink from /etc/systemd/system/multi-user.target.wants/keepalived.service to /usr/lib/systemd/system/keepalived.service.

[root@k8s-master /etc/keepalived]# systemctl start keepalived.service

[root@k8s-master /etc/keepalived]# ip addr show eth0

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:87:0b:5c brd ff:ff:ff:ff:ff:ff

inet 10.0.0.110/24 brd 10.0.0.255 scope global eth0

valid_lft forever preferred_lft forever

inet 10.0.0.100/32 scope global eth0

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fe87:b5c/64 scope link

valid_lft forever preferred_lft forever

[root@k8s-registry ~]# systemctl enable keepalived.service

Created symlink from /etc/systemd/system/multi-user.target.wants/keepalived.service to /usr/lib/systemd/system/keepalived.service.

[root@k8s-registry ~]# systemctl start keepalived.service

[root@k8s-registry ~]# ip addr show eth0

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:3a:d7:1f brd ff:ff:ff:ff:ff:ff

inet 10.0.0.140/24 brd 10.0.0.255 scope global eth0

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fe3a:d71f/64 scope link

valid_lft forever preferred_lft forever

step 4 Adjust kubelet

|

# /etc/kubernetes/kubelet

KUBELET_API_SERVER="--api-servers=http://<VIP address>:<port>" # API Server address and port

# /etc/kubernetes/config

KUBE_MASTER="--master=http://<VIP address>:<port>" # API Server address and port |
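
Both files need the same substitution on every node, so the edit can be wrapped in a small function. A minimal sketch that is demonstrated on a temporary file rather than on the real configuration; `switch_api_server` is a hypothetical helper name, and the old address is treated as a sed pattern (so `.` matches any character):

```shell
# switch_api_server (hypothetical helper): replace the old API server URL
# with the new one in a file, writing through a temporary copy.
switch_api_server() (
    file=$1 old=$2 new=$3
    # "#" is used as the sed delimiter because the URLs contain "/"
    sed "s#${old}#${new}#g" "$file" > "${file}.tmp" && mv "${file}.tmp" "$file"
)

# Demonstration on a scratch file
demo=$(mktemp)
printf '%s\n' 'KUBE_MASTER="--master=http://10.0.0.110:10000"' > "$demo"
switch_api_server "$demo" http://10.0.0.110:10000 http://10.0.0.100:10000
cat "$demo"
rm -f "$demo"
```

On a real node the same call would be pointed at /etc/kubernetes/kubelet and /etc/kubernetes/config, followed by restarting kubelet and kube-proxy.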

[root@k8s-node01 ~]# grep 10.0.0.100 /etc/kubernetes/kubelet

KUBELET_API_SERVER="--api-servers=http://10.0.0.100:10000"

[root@k8s-node01 ~]# sed -i s#"--master=http://10.0.0.110:10000"#"--master=http://10.0.0.100:10000"# /etc/kubernetes/config

[root@k8s-node01 ~]# tail -2 /etc/kubernetes/config

# How the controller-manager, scheduler, and proxy find the apiserver

KUBE_MASTER="--master=http://10.0.0.100:10000"

[root@k8s-node01 ~]# systemctl restart kubelet.service

[root@k8s-node01 ~]# systemctl restart kube-proxy.service

[root@k8s-node02 ~]# sed -i s#http://10.0.0.110:10000#http://10.0.0.100:10000# /etc/kubernetes/kubelet

[root@k8s-node02 ~]# grep 10.0.0.100 /etc/kubernetes/kubelet

KUBELET_API_SERVER="--api-servers=http://10.0.0.100:10000"

[root@k8s-node02 ~]# tail -2 /etc/kubernetes/config

# How the controller-manager, scheduler, and proxy find the apiserver

KUBE_MASTER="--master=http://10.0.0.100:10000"

[root@k8s-node02 ~]# systemctl restart kubelet.service

[root@k8s-node02 ~]# systemctl restart kube-proxy.service

[root@k8s-node02 ~]# kubectl -s http://10.0.0.100:10000 get node

NAME STATUS AGE

10.0.0.120 Ready 2h

10.0.0.130 Ready 2h

step 5 Verify

[root@k8s-registry ~]# etcdctl cluster-health

failed to check the health of member 2a864251cde86ff0 on http://10.0.0.110:2379: Get http://10.0.0.110:2379/health: dial tcp 10.0.0.110:2379: connect: no route to host

member 2a864251cde86ff0 is unreachable: [http://10.0.0.110:2379] are all unreachable

member c1583a2151ea4303 is healthy: got healthy result from http://10.0.0.140:2379

member fc5b819f06c6532e is healthy: got healthy result from http://10.0.0.120:2379

cluster is degraded

[root@k8s-registry ~]# kubectl -s http://127.0.0.1:10000 get nodes

NAME STATUS AGE

10.0.0.120 Ready 2h

10.0.0.130 Ready 2h

[root@k8s-registry ~]# ping 10.0.0.110

PING 10.0.0.110 (10.0.0.110) 56(84) bytes of data.

From 10.0.0.140 icmp_seq=1 Destination Host Unreachable

From 10.0.0.140 icmp_seq=2 Destination Host Unreachable

^C

--- 10.0.0.110 ping statistics ---

3 packets transmitted, 0 received, +2 errors, 100% packet loss, time 2001ms

pipe 2
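
In the check above, one of the three etcd members is unreachable and the cluster is reported as degraded, yet kubectl still gets answers. This fits etcd's majority rule: a cluster of n members stays available while floor(n/2)+1 of them are up. The arithmetic as a small sketch (`quorum` and `fault_tolerance` are hypothetical helper names):

```shell
# quorum: members that must be reachable for a cluster of size $1
quorum() (
    echo $(( $1 / 2 + 1 ))
)

# fault_tolerance: members that may fail before quorum is lost
fault_tolerance() (
    echo $(( $1 - ($1 / 2 + 1) ))
)

for n in 1 3 5; do
    echo "members=$n quorum=$(quorum "$n") tolerated_failures=$(fault_tolerance "$n")"
done
```

For the three-member cluster built here this gives a quorum of 2 and one tolerated failure, so losing a second member would make the cluster unavailable.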